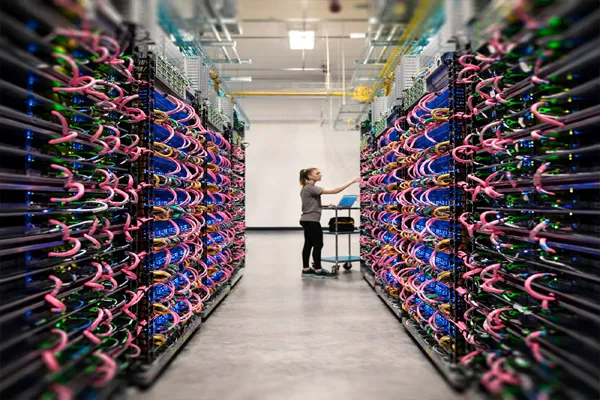

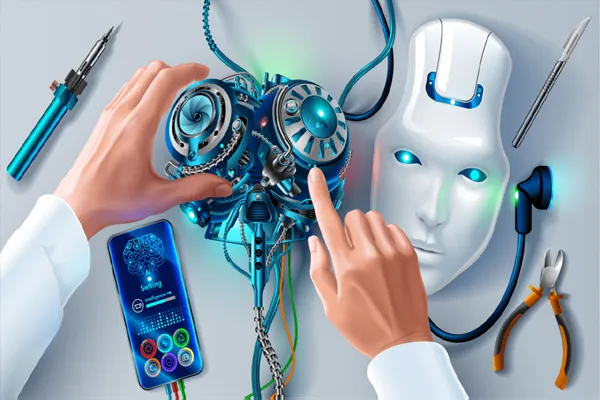

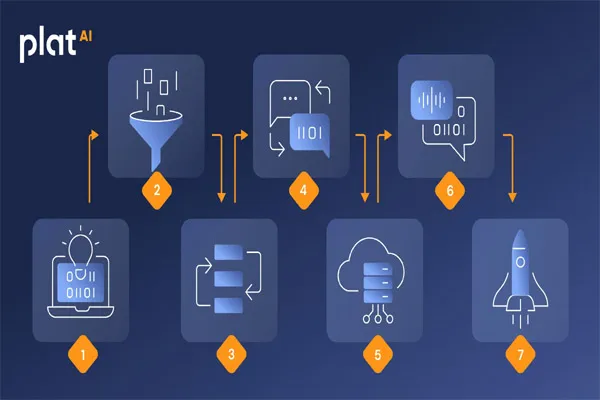

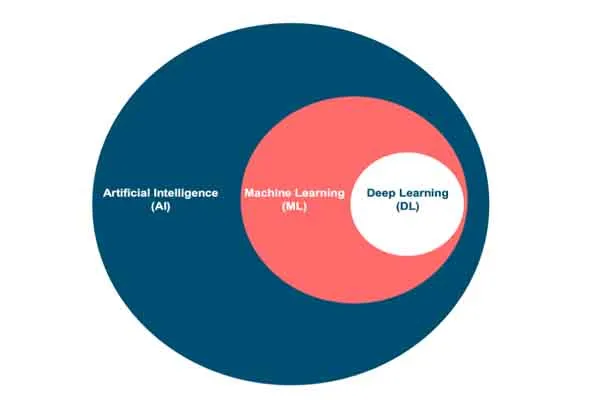

AI-generated images are created using advanced machine learning models, typically based on techniques like Generative Adversarial Networks (GANs), diffusion models, or transformer-based architectures.

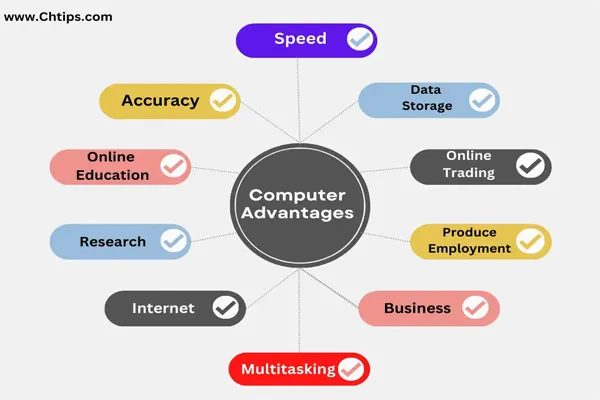

- Training the Model: The model is trained on massive datasets of images (e.g., photos, artwork, or other visuals), usually paired with text descriptions or labels. By analyzing these examples, it learns statistical patterns, styles, and features such as shapes, colors, textures, and composition.

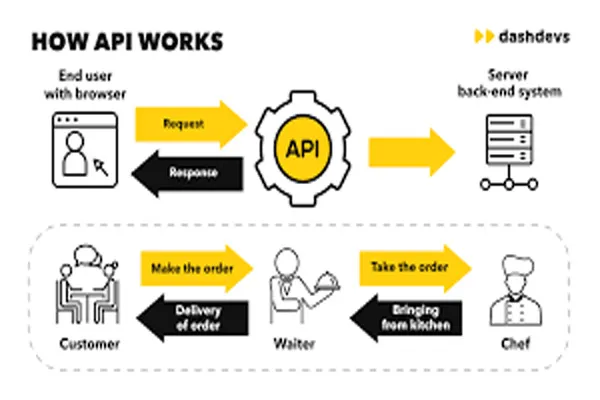

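- Input Processing: When you give the AI a prompt (e.g., "a cat in a spacesuit"), a text encoder, typically a transformer-based language model, converts the words into numerical vectors (embeddings) that capture their meaning. The image generator is then conditioned on these embeddings.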

- Image Creation:

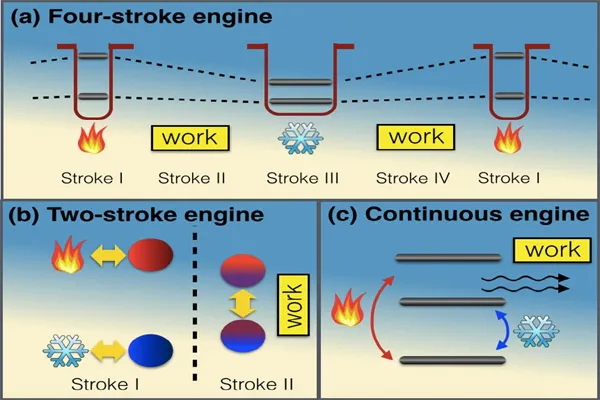

- GANs: Two neural networks—a generator and a discriminator—work together. The generator creates an image from random noise, while the discriminator evaluates it against real images. They compete, refining the output until it looks realistic.

- Diffusion Models: These start with random noise and gradually "denoise" it step-by-step, guided by the prompt, until a coherent image emerges.

- Other Methods: Some models, like those based on transformers, predict pixel values or image features directly from the prompt.

- Output: The model returns an image that reflects the prompt as well as its training allows. Quality depends on the model architecture, the size and quality of the training data, and how specific the prompt is.
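
The input-processing step can be sketched as turning a prompt into a vector. This is a toy, not a real text encoder: the hypothetical `token_embedding` function hashes each word into a fixed pseudo-random vector instead of using learned weights, so the numbers carry no meaning. It only illustrates the shape of the computation (tokenize, embed each token, pool into one conditioning vector). Real encoders use subword tokenizers and trained transformers, and many diffusion models keep the full per-token sequence rather than averaging it.

```python
import hashlib
import math

EMBED_DIM = 8  # hypothetical size; real text encoders use hundreds of dimensions


def tokenize(prompt: str) -> list[str]:
    """Lowercase and split on whitespace/commas (real models use subword tokenizers)."""
    return prompt.lower().replace(",", " ").split()


def token_embedding(token: str) -> list[float]:
    """Hypothetical stand-in for a learned embedding table.

    Hashes the token so the same word always gets the same vector,
    with components in [-1, 1); the values carry no learned meaning.
    """
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return [digest[i] / 128.0 - 1.0 for i in range(EMBED_DIM)]


def encode_prompt(prompt: str) -> list[float]:
    """Mean-pool the token vectors, then scale the result to unit length."""
    tokens = tokenize(prompt)
    if not tokens:
        return [0.0] * EMBED_DIM
    vectors = [token_embedding(t) for t in tokens]
    pooled = [sum(column) / len(vectors) for column in zip(*vectors)]
    norm = math.sqrt(sum(x * x for x in pooled)) or 1.0  # avoid dividing by zero
    return [x / norm for x in pooled]


if __name__ == "__main__":
    conditioning = encode_prompt("a cat in a spacesuit")
    print(len(conditioning), [round(x, 3) for x in conditioning])
```

The same prompt always yields the same vector, which is the property a generator needs in order to be conditioned on it; what a trained encoder adds is that similar meanings also land on similar vectors.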
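
The adversarial training described in the GANs bullet can be shown at toy scale. This is an assumption-laden 1-D sketch rather than a real image model: the "images" are single numbers drawn from a hypothetical real distribution (normal, mean 3, standard deviation 0.5), the generator is the linear map G(z) = a*z + b, and the discriminator is logistic regression D(x) = sigmoid(w*x). The two take alternating gradient steps, and the generator uses the common non-saturating loss -log D(G(z)).

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean=3.0, real_std=0.5, steps=2000, batch=64, lr=0.1, seed=0):
    """Alternate discriminator and generator updates on hypothetical 1-D data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator G(z) = a*z + b starts far from the real data
    w = 0.0          # discriminator D(x) = sigmoid(w*x)
    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        grad_w = 0.0
        for _ in range(batch):
            x_real = rng.gauss(real_mean, real_std)
            x_fake = a * rng.gauss(0.0, 1.0) + b
            grad_w += -(1.0 - sigmoid(w * x_real)) * x_real + sigmoid(w * x_fake) * x_fake
        w -= lr * grad_w / batch

        # Generator step: minimize -log D(G(z)), i.e. make D call fakes real.
        grad_a = grad_b = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            d_loss_d_x = -(1.0 - sigmoid(w * (a * z + b))) * w  # chain rule through D
            grad_a += d_loss_d_x * z  # dx/da = z
            grad_b += d_loss_d_x      # dx/db = 1
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch
    return a, b, w


if __name__ == "__main__":
    a, b, w = train_toy_gan()
    print(f"generator: x = {a:.2f} * z + {b:.2f}, discriminator weight w = {w:.3f}")
```

Because this discriminator is linear in x, it mostly detects a difference in means, so the generator reliably learns b close to 3 while getting little useful signal about the spread (a may drift). Real GANs use deep networks on both sides so that shapes, textures, and variety can be matched too.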
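
The denoising loop in the diffusion bullet can be sketched with the DDPM update rule on 1-D data. Two assumptions keep it runnable without training: each hypothetical prompt in `PROMPT_DATA` maps to a 1-D Gaussian "dataset", and `predict_noise` is the closed-form best estimate of the added noise for that Gaussian, standing in for a trained neural network. The linear noise schedule (beta from 1e-4 to 0.02 over 1,000 steps) follows the original DDPM paper.

```python
import math
import random
from itertools import accumulate
from operator import mul

# Hypothetical "training data": each prompt maps to a 1-D Gaussian (mean, std).
PROMPT_DATA = {"cat": (-2.0, 0.5), "dog": (2.0, 0.5)}

# Linear noise schedule: beta_1 = 1e-4 ... beta_T = 0.02.
T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
ALPHAS = [1.0 - beta for beta in BETAS]
ALPHA_BARS = list(accumulate(ALPHAS, mul))  # cumulative products alpha_bar_t


def predict_noise(x_t: float, t: int, prompt: str) -> float:
    """Stand-in for a trained network: E[noise | x_t] for the prompt's Gaussian data.

    Forward process: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise.
    For Gaussian x_0 the best estimate of the noise is linear in x_t.
    """
    mean, std = PROMPT_DATA[prompt]
    ab = ALPHA_BARS[t]
    var_t = ab * std**2 + (1.0 - ab)  # variance of x_t
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * mean) / var_t


def sample(prompt: str, rng: random.Random) -> float:
    """DDPM ancestral sampling: start from noise and denoise for T steps."""
    x = rng.gauss(0.0, 1.0)  # x_T: pure noise, no information about the prompt yet
    for t in reversed(range(T)):
        eps = predict_noise(x, t, prompt)
        # Remove the predicted noise component and rescale (DDPM mean update).
        x = (x - BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t]) * eps) / math.sqrt(ALPHAS[t])
        if t > 0:  # add fresh noise on every step except the last
            x += math.sqrt(BETAS[t]) * rng.gauss(0.0, 1.0)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    for prompt in PROMPT_DATA:
        xs = [sample(prompt, rng) for _ in range(200)]
        print(prompt, round(sum(xs) / len(xs), 2))
```

Every run starts from the same kind of noise centered at 0, yet samples for "cat" end up near -2 and samples for "dog" near 2: the prompt steers one shared denoising loop toward different outputs, which is the role text conditioning plays in a real model.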
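
The autoregressive approach from the "Other Methods" bullet can be sketched as a loop that predicts one image token at a time and feeds each prediction back in. Everything here is hypothetical and tiny: a 3-color codebook, 4x4 token grids, two training examples, and a count table keyed on (prompt, position, previous token) in place of a transformer. Only the generate-then-decode structure reflects the real technique.

```python
import random
from collections import Counter, defaultdict

# Hypothetical 3-entry codebook: each discrete token decodes to one RGB color.
CODEBOOK = {0: (0, 0, 0), 1: (255, 255, 255), 2: (255, 128, 0)}
SIDE = 4  # a toy image is a 4x4 grid of tokens, flattened row by row

# Hypothetical training pairs of (prompt, flattened token grid).
TRAINING = [
    ("orange square", [0, 0, 0, 0, 0, 2, 2, 0, 0, 2, 2, 0, 0, 0, 0, 0]),
    ("white stripe", [0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0]),
]


def fit(pairs):
    """Count which token follows each (prompt, position, previous token) context.

    This lookup table stands in for a transformer, which would instead attend to
    the whole prompt and every previously generated token.
    """
    counts = defaultdict(Counter)
    for prompt, tokens in pairs:
        prev = None  # None marks "start of image"
        for pos, tok in enumerate(tokens):
            counts[(prompt, pos, prev)][tok] += 1
            prev = tok
    return counts


def generate(counts, prompt, rng):
    """Autoregressive loop: sample one token at a time, feeding each back in."""
    tokens, prev = [], None
    for pos in range(SIDE * SIDE):
        dist = counts[(prompt, pos, prev)]
        if not dist:
            raise KeyError(f"unseen context for prompt {prompt!r} at position {pos}")
        choices, weights = zip(*dist.items())
        prev = rng.choices(choices, weights=weights)[0]
        tokens.append(prev)
    return tokens


def decode(tokens):
    """Map tokens back to pixels (real systems use a learned decoder here)."""
    return [[CODEBOOK[t] for t in tokens[r * SIDE:(r + 1) * SIDE]] for r in range(SIDE)]


if __name__ == "__main__":
    model = fit(TRAINING)
    image = decode(generate(model, "orange square", random.Random(0)))
    for row in image:
        print(row)
```

Because each prompt has a single training example, every context has exactly one possible next token and the loop simply reproduces that grid; with more varied data, `rng.choices` would sample among several plausible continuations, which is where the variety in real autoregressive models comes from.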