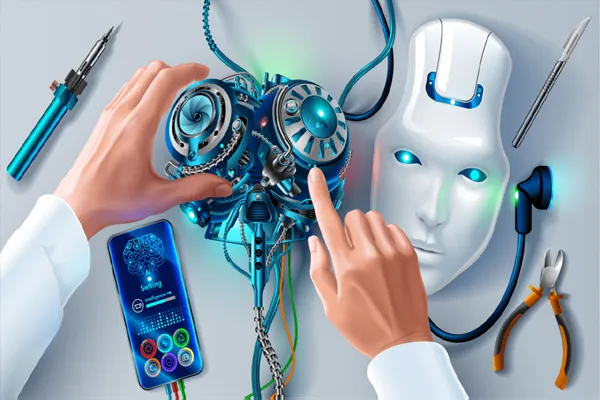

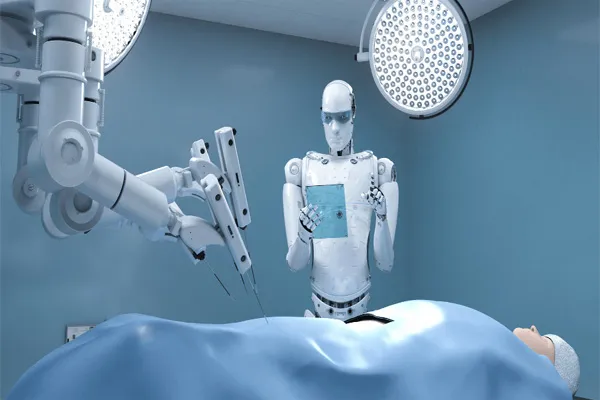

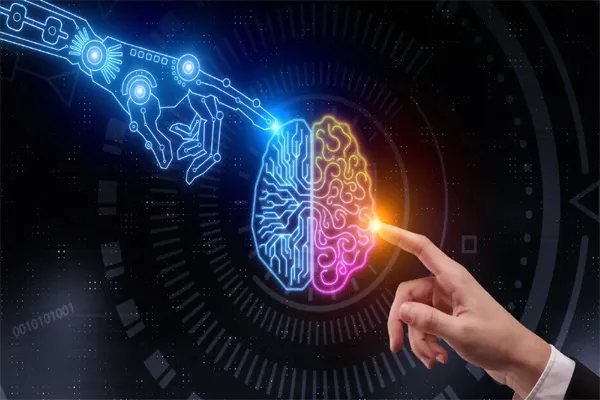

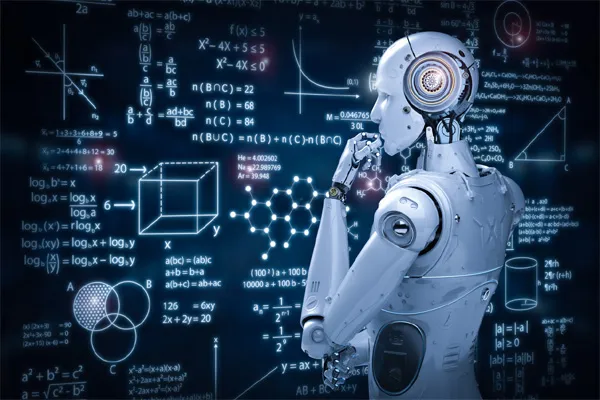

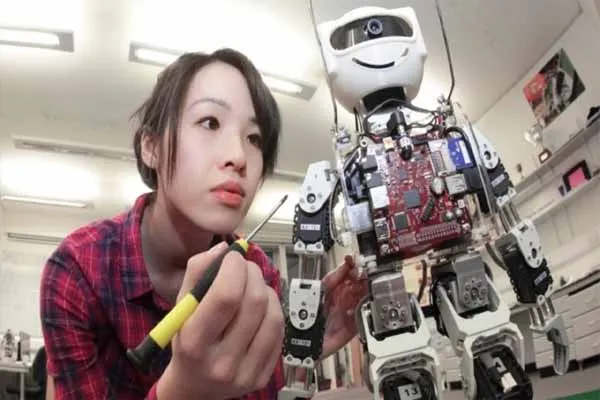

Robotics and artificial intelligence (AI) are transforming industries, from manufacturing to healthcare, by enabling machines to perform complex tasks with precision and autonomy. But how does AI initiate actions in robots? This process involves a seamless integration of perception, decision-making, and execution, powered by advanced algorithms and hardware. In this article, we’ll explore the step-by-step mechanism of how AI drives robotic actions, the technologies behind it, and real-world examples that showcase its impact.

1. The Foundation: AI and Robotics Integration

At its core, a robot is a physical system equipped with sensors, actuators, and a control unit. AI acts as the "brain" that processes data and makes decisions, enabling the robot to interact with its environment. The initiation of a robotic action begins with AI interpreting inputs and generating commands for the robot to execute.

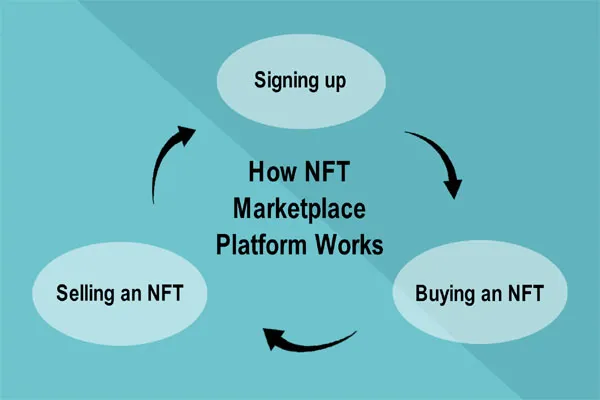

2. The Process: How AI Initiates Robotic Action

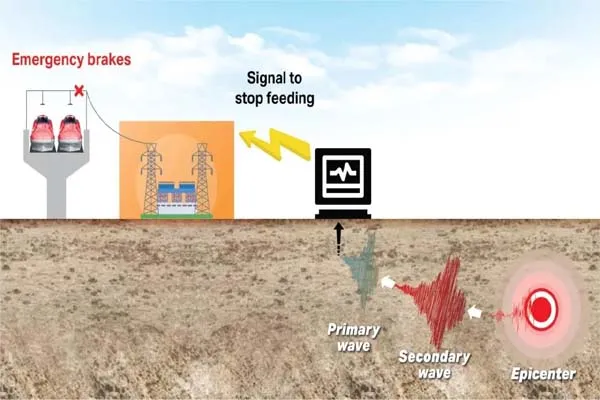

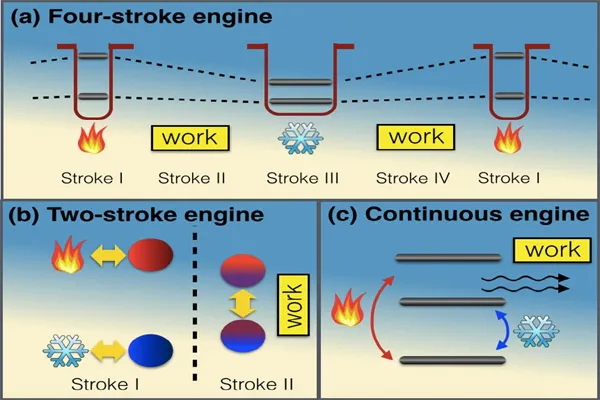

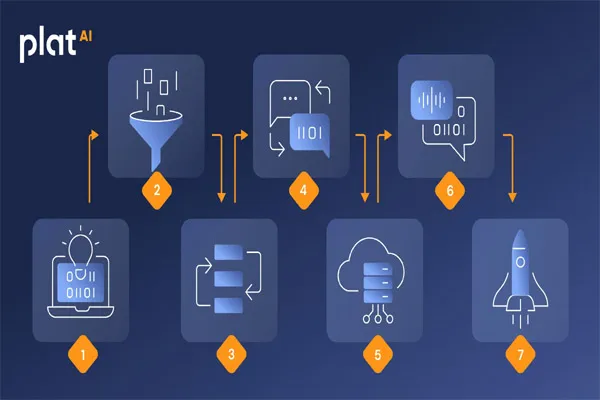

The journey from an environmental stimulus to a robotic action can be broken down into a cyclical process often referred to as the perception-decision-action loop, extended here with a fourth step in which the robot learns from the outcome:

- Step 1: Perception (Data Collection and Processing)

- Step 2: Decision-Making (Planning and Reasoning)

- Step 3: Action (Execution of Commands)

- Step 4: Learning and Improvement
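The first three steps can be sketched in a few lines of Python. This is a minimal illustration, not how any particular robot is programmed: the `Robot1D` class, its noise-free distance sensor, and the proportional rule in `decide` are all hypothetical, and the learning step is left out to keep the loop readable.

```python
from dataclasses import dataclass


@dataclass
class Robot1D:
    """Hypothetical robot driving along a line toward a wall."""
    position: float = 0.0
    wall_at: float = 10.0

    def read_distance_sensor(self) -> float:
        # Perception: distance to the wall (noise-free for simplicity).
        return self.wall_at - self.position

    def drive(self, velocity: float, dt: float) -> None:
        # Action: the actuator moves the robot at the commanded velocity.
        self.position += velocity * dt


def decide(distance: float, stop_distance: float = 1.0,
           gain: float = 0.5, max_speed: float = 1.0) -> float:
    """Decision: slow down near the wall and stop at stop_distance."""
    error = distance - stop_distance
    return max(0.0, min(max_speed, gain * error))


def run_loop(robot: Robot1D, steps: int = 200, dt: float = 0.1) -> float:
    """Repeat perceive, decide, act; return the final distance to the wall."""
    for _ in range(steps):
        distance = robot.read_distance_sensor()  # Step 1: perception
        velocity = decide(distance)              # Step 2: decision-making
        robot.drive(velocity, dt)                # Step 3: action
    return robot.read_distance_sensor()
```

Calling `run_loop(Robot1D())` drives the simulated robot toward the wall and lets it settle close to the one-unit stopping distance. On a real robot the same loop would typically run at a fixed rate, with noisy sensors and a far richer decision step.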

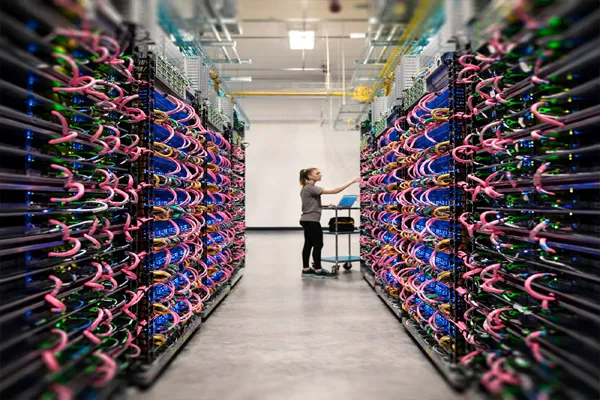

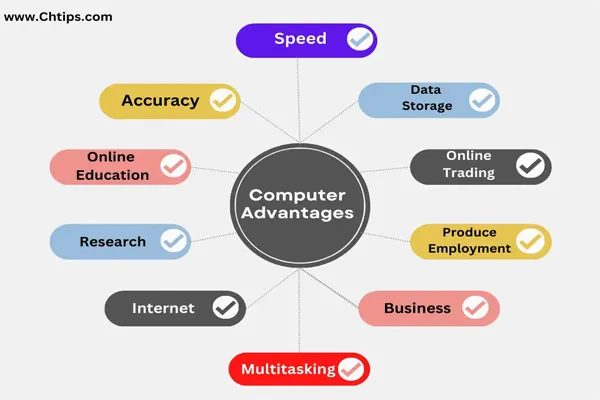

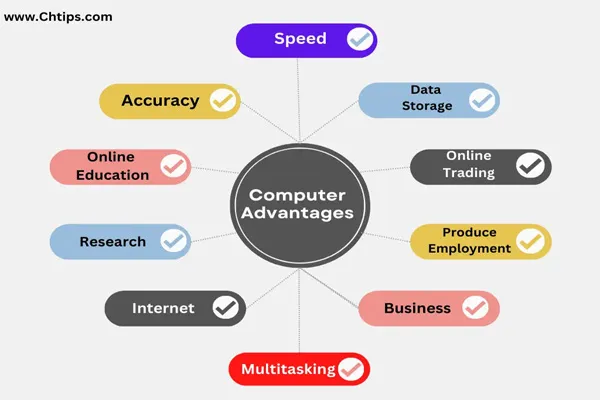

3. Key Technologies Powering AI-Initiated Robotics

Several cutting-edge technologies enable AI to initiate robotic actions effectively:

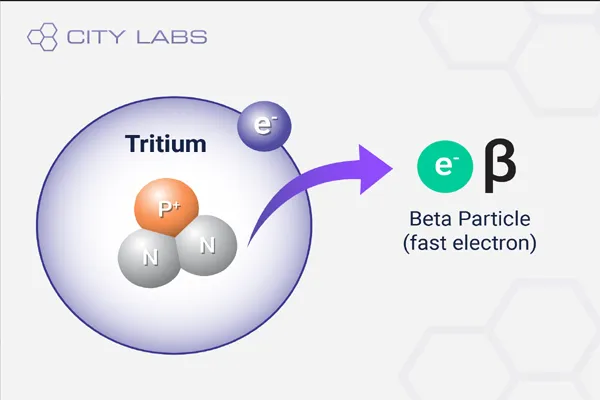

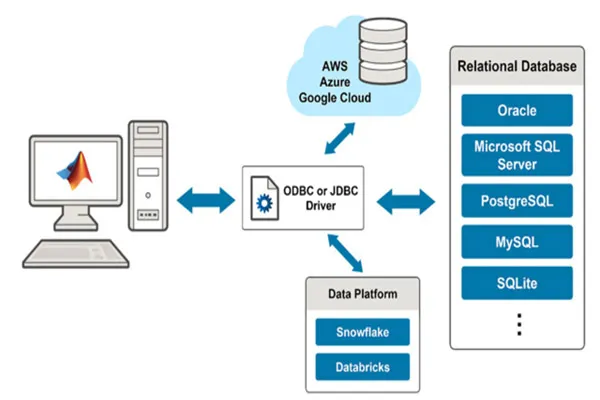

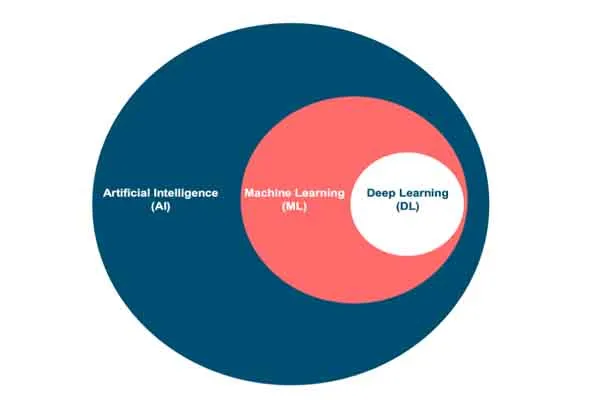

- Machine Learning and Deep Learning: Neural networks, especially convolutional neural networks (CNNs) for vision and recurrent neural networks (RNNs) for sequential tasks, allow robots to interpret complex data.

- Reinforcement Learning: Robots learn optimal actions through trial and error, ideal for tasks like robotic locomotion or game-playing robots.

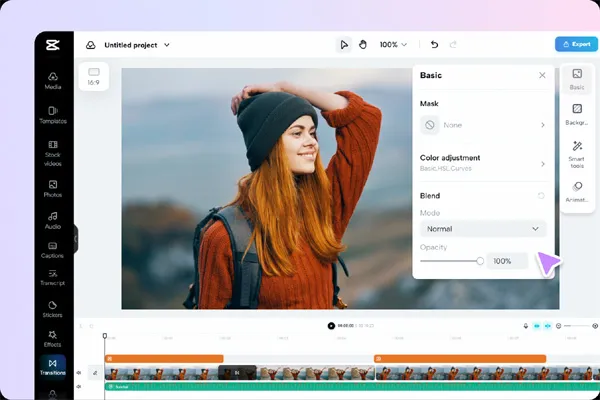

- Computer Vision: Enables robots to "see" and understand visual inputs, critical for tasks like object recognition or facial detection.

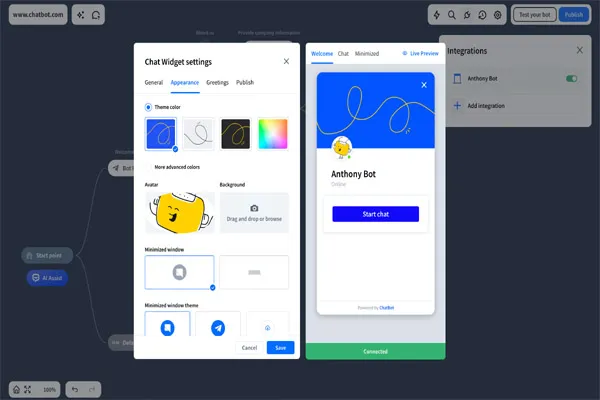

- Natural Language Processing (NLP): Allows robots to interpret voice commands or text, as seen in robotic customer service agents.

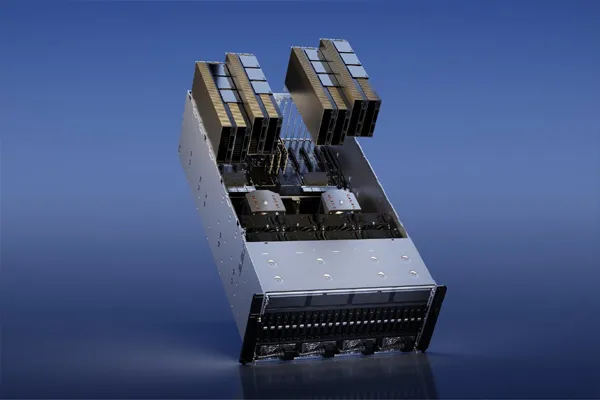

- Edge Computing: AI processing on the robot itself (rather than in the cloud) reduces latency, enabling real-time actions in applications like drones.

- Robot Operating System (ROS): An open-source middleware framework that simplifies communication between AI algorithms, sensors, and actuators.
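To make the trial-and-error idea behind reinforcement learning concrete, here is a small sketch of tabular Q-learning. The setting is an assumption chosen for brevity: a hypothetical six-cell corridor where the robot starts in cell 0 and is rewarded only on reaching the last cell. The function names and hyperparameters are illustrative, and real robots usually need far larger state spaces and function approximation.

```python
import random


def train_q_table(n_states: int = 6, episodes: int = 500, alpha: float = 0.5,
                  gamma: float = 0.9, epsilon: float = 0.2, seed: int = 0):
    """Tabular Q-learning on a corridor: start at cell 0, goal at the last cell."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            # Epsilon-greedy: mostly exploit, sometimes explore; break ties randomly.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            # The goal is terminal, so it contributes no future value.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
            if state == goal:
                break
    return q


def greedy_policy(q):
    """Best action index for every non-goal cell."""
    return [0 if left > right else 1 for left, right in q[:-1]]
```

After training, `greedy_policy(train_q_table())` should pick "step right" in every cell, because moving toward the goal is the only way to collect the reward. The robot is never told this rule; it emerges from repeated attempts and the value updates.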

4. Real-World Applications

AI-initiated robotic actions are already reshaping industries and daily life.

- Manufacturing: AI-powered robotic arms assemble cars with precision, adapting to variations in parts or unexpected obstacles. Companies like Tesla use AI to optimize factory workflows.

- Healthcare: Surgical robots, such as the da Vinci system, assist surgeons by translating their hand movements into steady, precise instrument motion. The surgeon stays in control throughout, so this is computer-assisted action rather than fully autonomous action.

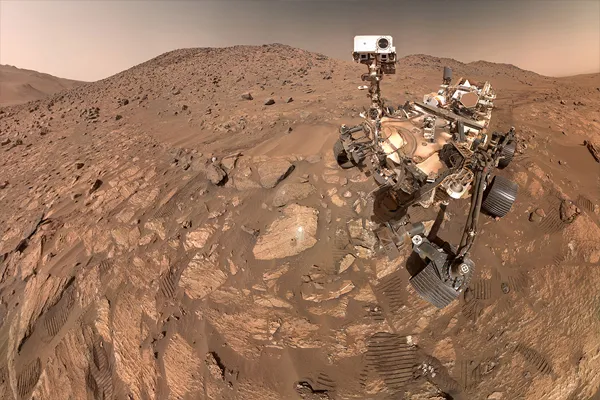

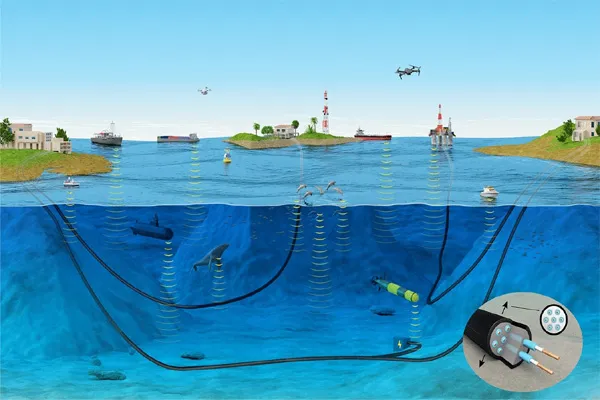

- Logistics: Autonomous delivery robots, like those developed by Starship Technologies, use AI to navigate sidewalks and deliver packages to customers, while delivery drones apply similar techniques in the air.

- Agriculture: AI-driven robots plant seeds, monitor crops, and harvest produce with minimal human intervention, increasing efficiency on farms.

- Service Industry: Robots like SoftBank’s Pepper use NLP and AI to interact with customers, answering questions and performing tasks in retail or hospitality settings.

5. Challenges and Future Directions

While AI-driven robotics is advancing rapidly, challenges remain:

- Complexity of Environments: Unpredictable or dynamic settings (e.g., crowded streets) can cause perception and planning errors, requiring more robust algorithms.

- Energy Efficiency: Running AI models on robots demands significant power, limiting battery life for mobile robots.

- Ethical Considerations: Autonomous robots raise questions about accountability, especially in critical applications like military drones or self-driving cars.

- Cost: High-end sensors and computing hardware can be expensive, though costs are decreasing over time.