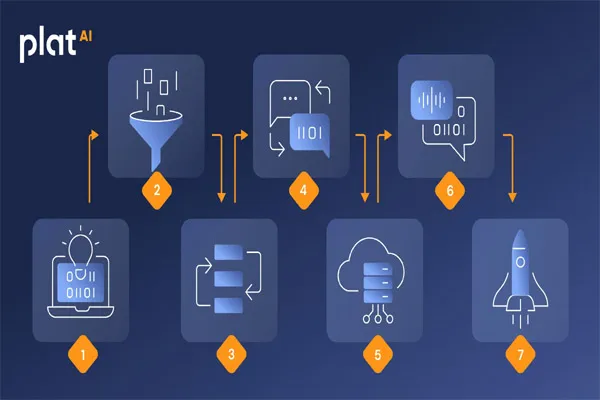

1. Training Phase

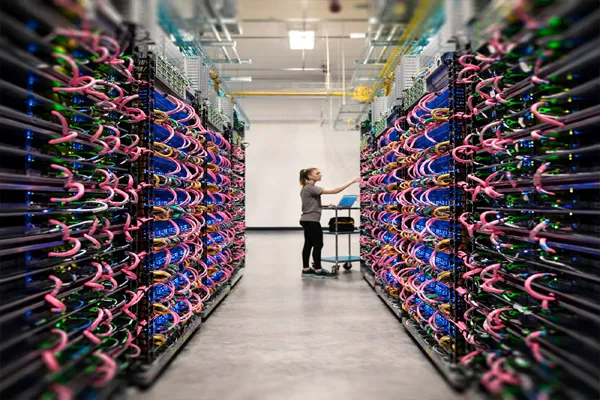

- Data Collection: The first step involves gathering a large dataset relevant to the task. For instance, if you're training a generative AI to create text, you might collect a dataset of books, articles, and other text sources.

- Model Training: A neural network is trained on this dataset. Transformers (e.g., GPT) are the usual choice for text, while other architectures such as GANs are often used for images. The model learns to predict the next word in a sentence, the next pixel in an image, or another target derived from the data it has seen. Training adjusts the model's parameters (weights) to minimize a loss that measures the difference between its predictions and the actual data.
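The training idea above can be sketched with a deliberately tiny model. This is a minimal illustration, not how a real Transformer is built: it assumes a bigram model (each word predicts only the next one), a made-up nine-word corpus, and hypothetical names such as `train_bigram`. The weights are adjusted by gradient descent to reduce the cross-entropy between predicted and actual next words.

```python
import math

def softmax(logits):
    # Turn raw scores into probabilities that sum to 1.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(tokens, epochs=200, lr=0.5):
    # Hypothetical helper: learns "which word follows which" from a token list.
    vocab = sorted(set(tokens))
    index = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    # weights[i][j] is the score for "word j follows word i".
    weights = [[0.0] * n for _ in range(n)]
    pairs = [(index[a], index[b]) for a, b in zip(tokens, tokens[1:])]
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        grads = [[0.0] * n for _ in range(n)]
        for prev, nxt in pairs:
            probs = softmax(weights[prev])
            # Cross-entropy: penalize low probability on the actual next word.
            total_loss += -math.log(probs[nxt])
            for j in range(n):
                grads[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        # Gradient descent step: move weights to reduce the average loss.
        for i in range(n):
            for j in range(n):
                weights[i][j] -= lr * grads[i][j] / len(pairs)
        losses.append(total_loss / len(pairs))
    return vocab, weights, losses

corpus = "the cat sat on the mat the cat ate".split()
vocab, weights, losses = train_bigram(corpus)
print(f"loss at start: {losses[0]:.3f}, loss at end: {losses[-1]:.3f}")
```

The printed loss should fall as training proceeds, which is the "minimize the difference" step in miniature. Real models follow the same loop with far more parameters and data.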

2. Generation Phase

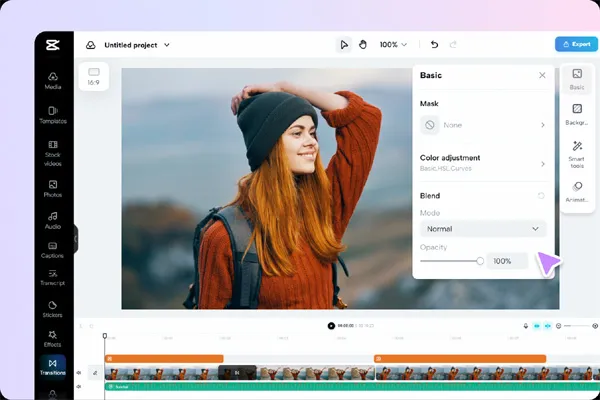

- Prompting: Once trained, the generative AI can create new content. The user provides an input or "prompt" (e.g., a sentence starter or an initial image), and the model generates new content based on this input.

- Sampling: The model generates outputs by sampling from the probability distribution it has learned. For text, it picks the next word in a sequence, one word at a time; for images, it might generate the next pixel or color pattern. Generation can be deterministic (always taking the most likely option) or involve some randomness to create more varied outputs.
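The difference between deterministic and randomized generation can be sketched as follows. This is an illustrative example under assumptions: the probabilities in `next_word_probs` are invented, `sample_next` is a hypothetical helper, and "temperature" is one common way to control randomness rather than the only one.

```python
import math
import random

def sample_next(probabilities, temperature=1.0, rng=random):
    # probabilities: dict mapping each candidate word to its probability.
    tokens = [t for t, p in probabilities.items() if p > 0]
    if temperature == 0:
        # Deterministic (greedy) choice: always the most likely word.
        return max(tokens, key=lambda t: probabilities[t])
    # Dividing log-probabilities by the temperature flattens the
    # distribution when temperature > 1 and sharpens it when < 1.
    scaled = [math.log(probabilities[t]) / temperature for t in tokens]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Invented probabilities for the word after "The cat sat on the".
next_word_probs = {"mat": 0.6, "sofa": 0.3, "moon": 0.1}

print(sample_next(next_word_probs, temperature=0))    # greedy pick
print(sample_next(next_word_probs, temperature=1.0))  # random, weighted pick
```

With a temperature of 0 the same word comes back every time; higher temperatures make less likely words appear more often.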

3. Refinement

- Iteration: Users often refine the generated content by tweaking the input prompts or by running the model multiple times to get different variations.

- Fine-Tuning: The model can be fine-tuned on specific tasks or datasets to improve its performance in particular areas, making it more specialized.

Types of Generative Models

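- Generative Adversarial Networks (GANs): Commonly used for generating images. GANs consist of two networks that are trained against each other: a generator that produces candidate images and a discriminator that tries to tell them apart from real ones. This competition pushes the generator to produce more realistic images over time.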

- Transformers: Used widely for text generation. Examples include OpenAI's GPT models, which generate coherent and contextually relevant text based on prompts.

- Variational Autoencoders (VAEs): These models learn to compress input data into a distribution over a lower-dimensional latent space, then generate new data by sampling from that distribution and decoding the sample.
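To make the contest between a GAN's generator and discriminator concrete, here is a small numeric sketch. It assumes the standard binary cross-entropy form of the GAN losses (with the commonly used non-saturating generator loss); the discriminator scores below are invented for illustration, and no actual networks are trained.

```python
import math

def discriminator_loss(d_real, d_fake):
    # d_real: discriminator's probability that a real image is real.
    # d_fake: discriminator's probability that a generated image is real.
    # The loss is low when real images score high and fakes score low.
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    # The generator wants its images to be scored as real,
    # so its loss is low when d_fake is high.
    return -math.log(d_fake)

# Invented scores: early in training the discriminator spots fakes easily,
# later the generator's images are harder to tell apart from real ones.
early = {"d_real": 0.9, "d_fake": 0.1}
late = {"d_real": 0.6, "d_fake": 0.5}

for name, scores in (("early", early), ("late", late)):
    d_loss = discriminator_loss(scores["d_real"], scores["d_fake"])
    g_loss = generator_loss(scores["d_fake"])
    print(f"{name}: discriminator loss {d_loss:.2f}, generator loss {g_loss:.2f}")
```

As the generator improves, its loss falls while the discriminator's rises, which is the sense in which the two networks work against each other.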

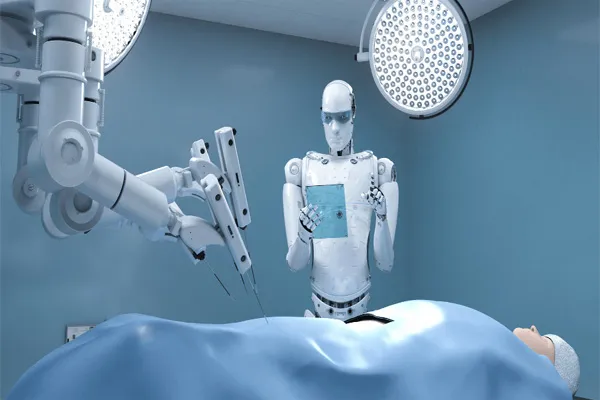

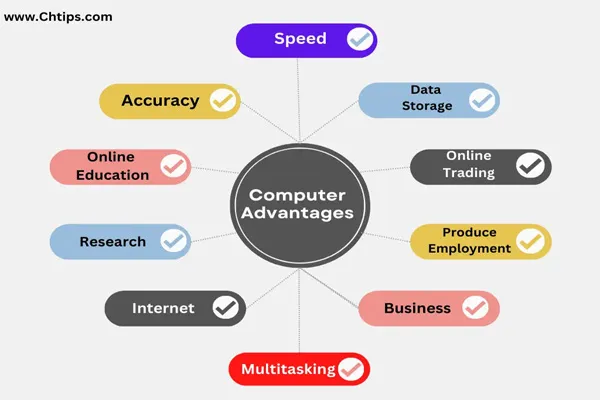

Applications

- Text Generation: Writing articles, stories, or even code.

- Image Generation: Creating artwork, designing products, or generating realistic faces.

- Music and Audio: Composing music or generating human-like speech.

- Video Game Development: Creating characters, landscapes, and narratives.

Challenges

- Quality Control: Ensuring the generated content is coherent and of high quality.

- Ethical Concerns: Addressing potential misuse, such as generating fake news or deepfakes.

- Bias: The AI might generate biased or inappropriate content if the training data contains biases.